Guidelines are provided here for annotating Speech Turn, Gaze, and Blinking in dyadic conversations. These guidelines have been developed within an ongoing research project run by Fred Cummins at University College Dublin.

The Corpus

The primary data we have worked with come from the IFA Video Dialog Corpus produced by the Nederlandse Taalunie. This corpus provides more than 20 dyadic conversations. The participants in each dyad know each other well. They sit opposite one another and converse for 15 minutes on topics of their choice. A video camera is situated behind the head of each participant, providing a full facial view of the participant opposite. Video is recorded at 25 frames/sec. All speech is in Dutch, but annotation does not require a full command of the language.
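Because annotation boundaries are ultimately tied to video frames, it can help to convert between frame indices and times. The following is a minimal sketch, assuming only the 25 frames/sec rate and the 15-minute duration stated above; the function names and the zero-based frame numbering are illustrative assumptions, not part of the corpus or of the annotation tools.

```python
import math

FPS = 25  # frame rate stated for the corpus video


def frame_to_seconds(frame: int, fps: int = FPS) -> float:
    """Return the start time, in seconds, of a zero-based frame index."""
    return frame / fps


def seconds_to_frame(t: float, fps: int = FPS) -> int:
    """Return the zero-based index of the frame on screen at time t (seconds).

    A tiny epsilon guards against floating-point error at frame boundaries.
    """
    return math.floor(t * fps + 1e-9)


def frames_in_minutes(minutes: float, fps: int = FPS) -> int:
    """Return the number of whole frames in a recording of the given length."""
    return math.floor(minutes * 60 * fps + 1e-9)


if __name__ == "__main__":
    # Each frame lasts 1/25 s, i.e. 40 ms, which bounds the temporal
    # resolution of any purely video-based annotation.
    print(frame_to_seconds(1))
    print(seconds_to_frame(1.0))
    print(frames_in_minutes(15))
```

At this frame rate, each frame spans 40 ms, so a nominal 15-minute conversation corresponds to 22,500 frames per camera.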

In the UCD study, we have examined and annotated data from pairs 1 to 7 and pair 20. Five participants (Subjects B, C, E, G, and H) take part in two conversations each, while six others take part in one conversation only.

Annotation Conventions

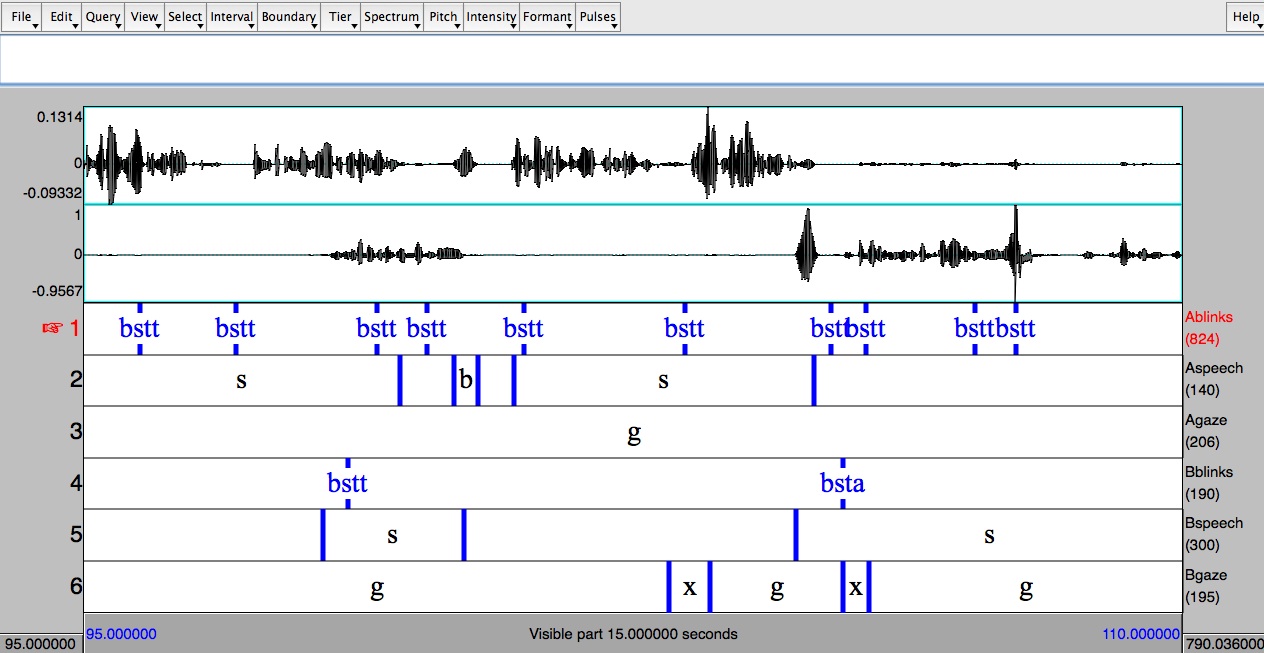

Although some annotations are available from the corpus source, we have chosen to annotate Speaking Turn, Gaze, and Blinking together from scratch. Annotation is done in Praat, a free, multi-platform programme, while the two synchronized videos are presented in Elan.

Sample screen shot from Elan:

Sample screen shot from Praat:

The tool bar above links to pages that describe the annotation methods in detail. These descriptions should be sufficient to allow replication of the experimental results. If you wish to replicate any part of the study, or to employ these guidelines in your own research, feel free to address questions or feedback to Fred Cummins.