The quantitative estimation of synchronization

Introduction

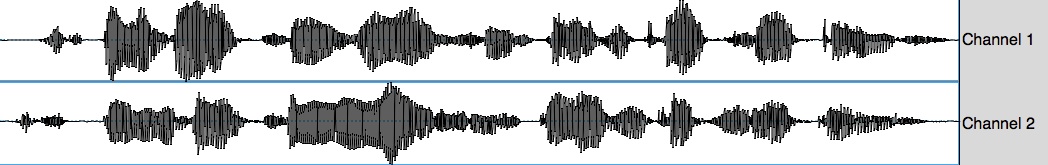

The method described here arose from a wish to assess how closely two speakers saying the same text at the same time were, in fact, synchronized. Figure 1 shows two speech waveforms that were originally captured as the right and left channels of a stereo sound file. How well aligned are these two utterances?

Fig 1: parallel recordings of the sentence "It's been about two years since Davy kept shotguns"

A similar issue arises when evaluating how consistently an individual produces speech while repeating the same sentence many times. To compare two such utterances, one would identify a temporal landmark in each (e.g. the onset of the first vowel) and then align the two utterances by taking that landmark as t = 0 in both.
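Landmark alignment is simple arithmetic: subtract each utterance's landmark time from every time stamp in that utterance. The Python sketch below is only an illustration. It assumes each repetition has already been annotated with a list of corresponding event times in seconds, and the function names and data are hypothetical. After alignment, the differences between matching events show how far the two repetitions drift apart away from the landmark.

```python
def align_to_landmark(event_times, landmark):
    """Re-express event times (seconds) relative to a landmark taken as t = 0."""
    return [t - landmark for t in event_times]


def event_offsets(times_a, landmark_a, times_b, landmark_b):
    """Offsets (b minus a, seconds) between corresponding events after alignment.

    Hypothetical helper: assumes both lists hold the same events in the same order.
    """
    if len(times_a) != len(times_b):
        raise ValueError("both utterances must have the same number of events")
    aligned_a = align_to_landmark(times_a, landmark_a)
    aligned_b = align_to_landmark(times_b, landmark_b)
    return [b - a for a, b in zip(aligned_a, aligned_b)]
```

For example, take hypothetical vowel onsets [0.21, 0.48, 0.80, 1.15] and [0.35, 0.60, 0.97, 1.36], using each first onset as the landmark. The offsets are 0, -0.02, 0.03 and 0.07 s, up to floating-point rounding. By construction, the landmark itself always lines up.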

A full write-up of the procedure is provided in the following technical report:

Cummins, F. (2007). The quantitative estimation of asynchrony among concurrent speakers. Technical Report UCD-CSI-2007-2, School of Computer Science and Informatics, University College Dublin.

Overview

The procedure employed can be summarized as follows:

- Represent each utterance as a sequence of mel-frequency cepstral coefficient (MFCC) vectors, computed over short, overlapping time windows.

- Use the well-known dynamic time warping (DTW) algorithm to map one utterance (the comparator) onto the other (the referent).

- Calculate the amount of warping required, considering only the voiced portions of the referent.
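The warping step can be illustrated with a textbook DTW implementation. The Python sketch below is not the code in trackSynch.m. It makes three assumptions:

- Each utterance is already a list of equal-length feature vectors, such as MFCC frames.
- Frames are compared by Euclidean distance.
- Only the basic step pattern is used: a diagonal match, or one sequence advancing alone.

It returns the total alignment cost and the optimal path as (referent frame, comparator frame) pairs.

```python
import math


def frame_distance(x, y):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def dtw_path(referent, comparator):
    """Return (total_cost, path) for the optimal alignment of two frame sequences.

    path is a list of (i, j) pairs, referent frame i matched to comparator frame j,
    running from (0, 0) to (len(referent) - 1, len(comparator) - 1).
    """
    n, m = len(referent), len(comparator)
    if n == 0 or m == 0:
        raise ValueError("both sequences must contain at least one frame")
    inf = float("inf")
    # cost[i][j]: minimal accumulated cost of aligning referent[:i] with comparator[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = frame_distance(referent[i - 1], comparator[j - 1])
            cost[i][j] = d + min(cost[i - 1][j - 1],  # diagonal: both advance
                                 cost[i - 1][j],      # referent advances alone
                                 cost[i][j - 1])      # comparator advances alone
    # Backtrack from the final cell; on ties the diagonal step is preferred.
    i, j = n, m
    path = [(i - 1, j - 1)]
    while (i, j) != (1, 1):
        _, i, j = min((cost[i - 1][j - 1], i - 1, j - 1),
                      (cost[i - 1][j], i - 1, j),
                      (cost[i][j - 1], i, j - 1))
        path.append((i - 1, j - 1))
    path.reverse()
    return cost[n][m], path
```

As an example, align the referent [[0.0], [1.0], [2.0]] with a comparator that holds its first frame for one extra step, [[0.0], [0.0], [1.0], [2.0]]. The total cost is 0.0 and the path is [(0, 0), (0, 1), (1, 2), (2, 3)], so the referent's first frame absorbs the comparator's extra frame. This plain version needs time and memory proportional to the product of the two sequence lengths, which is manageable for single sentences.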

Practical steps to implement the method

Before you start, I suggest you download the zip file asynchrony.zip, uncompress it, and have a look at its contents. It contains all the programs mentioned below, together with the final result.

Set up your files

The example provided here contains only two utterances, both in the folder called "recordings". We will compare them using the shorter one (utterance2.wav) as the referent and warping the other (utterance1.wav) onto it.
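Choosing the shorter file as the referent can be automated. The Python sketch below uses the standard library `wave` module and assumes uncompressed WAV files that this module can read. The helper names are hypothetical and not part of the downloadable scripts.

```python
import wave


def wav_duration(path):
    """Duration of a WAV file in seconds (frames divided by sample rate)."""
    with wave.open(path, "rb") as w:
        return w.getnframes() / w.getframerate()


def choose_referent(path_a, path_b):
    """Return (referent, comparator), taking the shorter file as the referent."""
    if wav_duration(path_a) <= wav_duration(path_b):
        return path_a, path_b
    return path_b, path_a
```

The text identifies utterance2.wav as the shorter example file. So choose_referent("recordings/utterance1.wav", "recordings/utterance2.wav") should return utterance2.wav as the referent.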

Step 1: estimate the voiced portions of the utterances. For simplicity, we will do this in one fell swoop for all files in the "recordings" folder. I assume that you have both Perl and Praat installed. You will run the script "prepareUtterances.pl" from the command line. Before you do so, edit the script so that it calls Praat in the correct way for your machine. Instructions for calling Praat from the command line can be found in the Praat documentation.

Running the script creates files in the "ppfiles" folder, two for each utterance. One is generated by Praat (.pp), and the other is derived from it by stripping out the header (.ppraw). We will use the .ppraw files.
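The text does not describe the format of the .ppraw files or how voicing is read from them. As one plausible illustration, the Python sketch below assumes (hypothetically) that each non-blank line of a .ppraw file holds a single glottal pulse time in seconds, such as the points of a Praat PointProcess. It treats runs of pulses spaced no more than a maximum period apart as voiced stretches. The 0.02 s threshold is an assumed value, not one taken from prepareUtterances.pl or trackSynch.m.

```python
def read_pulse_times(lines):
    """Parse pulse times in seconds.

    Assumption (hypothetical format): one number per non-blank line.
    """
    return [float(line) for line in lines if line.strip()]


def voiced_intervals(pulses, max_period=0.02):
    """Group pulse times into (start, end) voiced intervals, in seconds.

    A new interval starts whenever the gap between consecutive pulses exceeds
    max_period (an assumed 20 ms default). Isolated single pulses are dropped.
    """
    intervals = []
    start = prev = None
    for t in sorted(pulses):
        if prev is None or t - prev > max_period:
            if start is not None and prev > start:
                intervals.append((start, prev))
            start = t
        prev = t
    if start is not None and prev > start:
        intervals.append((start, prev))
    return intervals


def is_voiced(t, intervals):
    """True if time t (seconds) falls inside any voiced interval."""
    return any(s <= t <= e for s, e in intervals)
```

Frame-level voicing flags for the referent can then be obtained by calling is_voiced at each frame's centre time.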

Step 2: use Matlab to map one utterance onto the other and estimate their dissimilarity. We designate one file as the referent and the other as the comparator. A simple choice is to take the shorter file as the referent, which in our case is utterance2.wav. The Matlab function we will use, "trackSynch.m", takes the referent, the comparator, and the referent's .ppraw file, in that order. It is called thus:

```matlab
>> trackSynch('recordings/utterance2.wav','recordings/utterance1.wav','ppfiles/utterance2.ppraw');
```

It produces a quantitative estimate of asynchrony: the more dissimilar the two utterances, the larger the value.
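The overview says the estimate reflects the amount of warping over the voiced portions of the referent. The technical report defines the measure trackSynch.m actually computes. The Python sketch below shows one hypothetical way to turn a warping path into such a number and may differ from the original.

Each referent frame's matched comparator frames are averaged. Their distance from the diagonal j = i (perfect synchrony) is converted to seconds and then averaged over voiced referent frames only. The path format, the voicing flags and the 10 ms hop are all assumptions.

```python
def asynchrony_score(path, voiced, hop_s=0.01):
    """Mean absolute time offset (seconds) along a warping path, voiced frames only.

    path:   list of (i, j) pairs, referent frame i matched to comparator frame j
    voiced: list of booleans, one per referent frame
    hop_s:  time between successive frames in seconds (assumed 10 ms default)

    A path lying on the diagonal (j == i everywhere) scores 0.
    """
    matches = {}
    for i, j in path:
        matches.setdefault(i, []).append(j)
    offsets = [abs(sum(js) / len(js) - i) * hop_s
               for i, js in matches.items() if voiced[i]]
    if not offsets:
        raise ValueError("no voiced referent frames on the path")
    return sum(offsets) / len(offsets)
```

For example, take the path [(0, 0), (0, 1), (1, 2), (2, 3)] with all three referent frames voiced. The offsets are 0.5, 1 and 1 frames, so the score is about 0.0083 s at a 10 ms hop. Restricting the average to voiced frames keeps pauses, where the spectral match is unreliable, from dominating the result.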

In practice, you will want to process many files and adapt the procedure to your own setup. The easiest way is to write a wrapper function around trackSynch.m that sets up the desired paths and filenames and writes each estimate to an output file.
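For illustration, here is the same wrapper pattern sketched in Python. It loops over (referent, comparator) pairs, derives each referent's .ppraw path from its filename, calls a scoring function, and writes one CSV row per pair.

- The `score` callable is hypothetical. It stands in for trackSynch.m or a reimplementation, and takes its arguments in the same order.
- The folder defaults are assumptions that mirror the example's "recordings" and "ppfiles" layout.

```python
import csv
import os


def ppraw_for(wav_path, pp_dir="ppfiles"):
    """Map e.g. recordings/utterance2.wav to ppfiles/utterance2.ppraw."""
    stem = os.path.splitext(os.path.basename(wav_path))[0]
    return os.path.join(pp_dir, stem + ".ppraw")


def batch_estimates(pairs, score, out_file, pp_dir="ppfiles"):
    """Score each (referent, comparator) pair and write the results as CSV.

    score(referent_wav, comparator_wav, referent_ppraw) -> float is a
    hypothetical callable using the same argument order as trackSynch.
    out_file is any writable text file object.
    """
    writer = csv.writer(out_file)
    writer.writerow(["referent", "comparator", "estimate"])
    for referent, comparator in pairs:
        estimate = score(referent, comparator, ppraw_for(referent, pp_dir))
        writer.writerow([referent, comparator, estimate])
```

When writing to a real file, open it with newline="", as the csv module documentation recommends, to avoid blank lines on some platforms.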

Note: the "voicebox" folder must be on your Matlab path (for example, added with the addpath command). See the Matlab documentation for details.